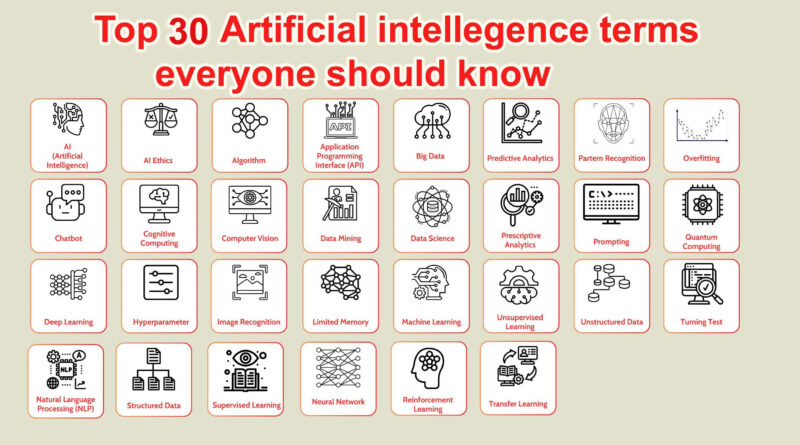

Top 30 Artificial intelligence terms everyone should know

AI (Artificial Intelligence), Machine Learning, and Deep Learning are often used as if they meant the same thing, and different people define them in different ways. In fact, they are distinct, nested ideas.

For any business venture, IT, or software project, the distinctions between AI, machine learning, and deep learning can be significant. This is why everyone should be familiar with the Top 30 Artificial intelligence terms, starting with the following fundamental ideas.

Artificial Intelligence (AI)

Much like the relationship between business intelligence and analytics, AI (Artificial Intelligence) is the general field that contains machine learning and deep learning. It is the branch of computer science focused on building systems that can perform tasks that normally require human cognition, such as reasoning, learning, problem solving, perception, and language acquisition. In short, AI is the practice of using computer systems to build machines that act intelligently, as humans do.

Machine Learning (ML)

Machine learning is the part of AI concerned with methods and tools that let systems improve their performance on a task through experience. An ML model learns patterns from data and uses them to make decisions or predictions. For instance, the recommendation engine in a music streaming service is a machine learning application that suggests songs based on the user's listening habits. Because it is used so widely across today's leading industries, machine learning is one of the 30 AI terms everyone should be familiar with.

Deep Learning (DL)

Deep learning is a type of machine learning that uses neural networks with many layers to analyze different features of the data. Deep learning models are complex and powerful, and their structure is loosely inspired by the human brain. The technique works best on large volumes of complex, unstructured data such as images, audio, and text. Proven applications include image recognition, natural language processing, and self-driving cars.

Algorithm

If you want to understand the more complex terms of artificial intelligence, this list of the Top 30 Artificial intelligence terms everyone should know is a good starting point. Algorithms are an essential component of artificial intelligence systems because they form the basis of AI. In this section, we explain how algorithms are used in AI, why they are crucial, and give examples of common types.

What is an Algorithm?

An algorithm is a finite sequence of well-defined steps that a system can execute to carry out an operation. In artificial intelligence, algorithms are used to process information, make decisions, and learn from previous experience. They are basic to AI systems and range from simple arithmetic operations to complex decision-making processes.
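As a concrete, non-AI illustration of "a finite sequence of steps", here is binary search, which finds a value in a sorted list by repeatedly halving the range it still has to check. It is a sketch for illustration only; the function name `binary_search` is our own and is not taken from any library.

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if it is absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:                 # each iteration halves the search range
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1              # target can only be in the upper half
        else:
            high = mid - 1             # target can only be in the lower half
    return -1                          # range is empty: target not present

print(binary_search([2, 5, 8, 13, 21], 13))  # 3
```

Because the range shrinks on every step, the loop is guaranteed to finish, which is exactly the "finite" property in the definition.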

Algorithms and their Role in AI

Algorithms matter in artificial intelligence because they are what allow machines to process information and make decisions. They enable AI systems to analyze large data sets and improve the efficiency and accuracy of their solutions. Algorithms are the key that made today's AI possible.

Different Kinds of Algorithms used in AI

- **Machine Learning Algorithms**: These include supervised, unsupervised, and reinforcement learning algorithms. They let systems adapt to data and improve without being explicitly programmed for each case.
- **Neural Networks**: These are used in deep learning, and their name comes from the fact that they are modeled after the human brain.
- **Genetic Algorithms**: These solve problems using ideas borrowed from evolution and heredity, such as selection, crossover, and mutation.
- **Heuristic Algorithms**: These use experience-based approaches (rules of thumb) for problem solving, learning, and discovery, aiming for practical solutions rather than guaranteed optimal ones.
- **Decision Trees**: Popular in classification and regression analysis, they model decisions as a tree of choices that is easy to understand.

Artificial Neural Network (ANN)

ANN stands for Artificial Neural Network, another of the 30 terms you need to know about artificial intelligence. An ANN is a computational model inspired by the networks of neurons in the human brain, and it processes information efficiently. It plays a significant role in many AI applications, such as image and speech recognition and natural language processing.

Structure of ANN

An Artificial Neural Network consists of nodes (neurons) arranged in layers, with connections between the nodes. Each neuron takes in data from the previous layer, processes it, and passes the result on to the next layer. A typical network has an input layer, one or more hidden layers, and an output layer.
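The layer structure described above can be sketched as a single forward pass in plain Python. The weights, biases, layer sizes, and the choice of the sigmoid activation are all hypothetical values picked for illustration; a trained network would learn its weights from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Each neuron outputs sigmoid(weighted sum of all inputs + its bias)."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical parameters for a 2-input, 3-hidden-neuron, 1-output network
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[1.0, -1.5, 0.6]]
output_biases = [0.2]

x = [1.0, 0.5]                                               # input layer: one value per feature
hidden = dense_layer(x, hidden_weights, hidden_biases)       # hidden layer
output = dense_layer(hidden, output_weights, output_biases)  # output layer
print(hidden, output)
```

Each layer's output list becomes the next layer's input list, which is the "passes on the data to the next layer" step in code form.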

Training and Learning

When an ANN is trained, the network is fed large volumes of data and the weights of the connections between neurons are adjusted to improve its predictive accuracy. This process is called learning, and it resembles the way humans learn from experience. Another term from the Top 30 Artificial intelligence terms everyone should know belongs here: backpropagation, the algorithm used to train ANNs by computing how much each weight contributed to the error so that the weights can be adjusted.
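A minimal sketch of this training idea, assuming the simplest possible network: a single sigmoid neuron learning the logical OR function. With only one layer, backpropagation reduces to a single application of the chain rule; the cross-entropy loss, learning rate, and number of epochs are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Labeled training data for logical OR: (inputs, target)
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias, learning_rate = [0.0, 0.0], 0.0, 0.5

def predict(x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

for epoch in range(1000):
    for x, target in data:
        # With a sigmoid output and cross-entropy loss, dLoss/dz = prediction - target
        error = predict(x) - target
        # Chain rule: dLoss/dw_i = error * x_i and dLoss/dbias = error
        weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
        bias -= learning_rate * error

print([round(predict(x)) for x, _ in data])  # [0, 1, 1, 1]
```

In a multi-layer network, backpropagation repeats the same chain-rule step layer by layer, from the output back toward the input.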

Applications

Artificial Neural Networks are applied in many fields. In healthcare, they can help predict patient health outcomes and diagnose diseases. In finance, they are used for risk assessment and to detect fraudulent activity. In the automotive industry, ANNs help power self-driving cars.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is also among the Top 30 AI terms everyone should know. NLP is the subfield of artificial intelligence that deals with the interaction between people and computers through natural language. Because it enables machines to understand, interpret, and generate human language, it plays an important role in many AI applications.

Understanding NLP

Natural Language Processing is the set of methods that turn human words into something a computer can work with and understand. It involves many elaborate steps, including language modeling and parsing. It is used in everyday services such as chatbots, virtual assistants, and language translation.
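Before a computer can work with text, the text usually has to be split into tokens and turned into numbers. The sketch below shows one very simple approach: lowercasing, regex-based tokenization, and word counts (a "bag of words"). Real NLP systems use far richer representations, and the regular expression here is an assumption that only handles basic English words.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def bag_of_words(text):
    """Map each token to how often it occurs: a simple numeric representation of text."""
    return Counter(tokenize(text))

print(tokenize("Chatbots answer questions."))  # ['chatbots', 'answer', 'questions']
print(bag_of_words("the cat saw the dog"))
```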

Computer Vision

Computer vision is another of the 30 most important Artificial intelligence terms everyone should be familiar with. It is a crucial subfield of AI that lets computers see and make decisions based on what they see. The technology underpins numerous applications, from facial recognition to self-driving cars.

Introduction to Computer Vision

Computer vision covers the methods computers use to analyze images and video. Major tasks include image classification, localization, and segmentation. Together, these let a system detect objects, determine where they are, and separate them from one another and from the background, even when they are of different types.
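As a toy illustration of localization, the sketch below finds the bounding box around bright pixels in a tiny grayscale image by simple thresholding. Real computer vision systems use learned models (for example, convolutional neural networks) rather than a fixed threshold; the `threshold=128` default and the example image are made up.

```python
def bounding_box(image, threshold=128):
    """Return (top, left, bottom, right) of pixels brighter than threshold, or None."""
    rows = [r for r, row in enumerate(image) if any(p > threshold for p in row)]
    cols = [c for c in range(len(image[0])) if any(row[c] > threshold for row in image)]
    if not rows:
        return None                       # nothing bright enough: no object found
    return (min(rows), min(cols), max(rows), max(cols))

image = [
    [0,   0,   0, 0],
    [0, 200, 220, 0],
    [0, 210,   0, 0],
    [0,   0,   0, 0],
]
print(bounding_box(image))  # (1, 1, 2, 2)
```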

Supervised Learning

No list of the Top 30 Artificial intelligence terms everyone should know would be complete without Supervised Learning. In supervised learning, a machine is fed data that comes with labels, so that it learns to predict outputs or make decisions from input/output pairs. A few principles are specific to supervised learning, and discussing them helps explain its role in AI.

Training Data

In supervised learning, every training example pairs an input with its correct output, known as its label. This labeled data is essential, because it is the raw material the model learns from. Accurate predictions are difficult to achieve when the training data is of poor quality.
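A minimal sketch of learning from labeled data, using a 1-nearest-neighbour classifier (one of the simplest supervised methods, chosen here for brevity): a new input receives the label of the most similar training example. The feature values and the "cat"/"dog" labels are invented for illustration.

```python
import math

# Labeled training data: (features, label). Values are made up for illustration.
training_data = [
    ((1.0, 1.2), "cat"),
    ((1.1, 0.9), "cat"),
    ((3.0, 3.2), "dog"),
    ((3.1, 2.8), "dog"),
]

def predict(features):
    """1-nearest-neighbour: return the label of the closest training example."""
    nearest = min(training_data, key=lambda item: math.dist(item[0], features))
    return nearest[1]

print(predict((1.05, 1.0)))  # cat
print(predict((2.9, 3.0)))   # dog
```

The quality point above shows up directly here: a mislabeled training example would be copied straight into the predictions for nearby inputs.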

Unsupervised Learning

Unsupervised Learning is another of the fundamental ideas of artificial intelligence on the list of Top 30 Artificial intelligence terms everyone should know. In supervised learning the system learns from labeled data, but in unsupervised learning the data is unlabeled. Unsupervised learning is effective at finding hidden patterns or the intrinsic structure of the input data.
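To show structure being found in unlabeled data, here is a small k-means clustering sketch on one-dimensional numbers. The data, the starting centroids, and the fixed iteration count are assumptions for illustration; note that the algorithm is never told which group any value belongs to.

```python
def k_means_1d(values, centroids, iterations=10):
    """Group unlabeled numbers around len(centroids) centres (Lloyd's algorithm)."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            # Assignment step: each value joins its nearest centroid
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster (keep it if empty)
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters

centroids, clusters = k_means_1d([1, 2, 3, 10, 11, 12], centroids=[0.0, 5.0])
print(centroids, clusters)  # [2.0, 11.0] [[1, 2, 3], [10, 11, 12]]
```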

Reinforcement Learning

Reinforcement Learning is considered one of the essential entries in the list of Top 30 Artificial intelligence terms everyone should know. It is a subcategory of machine learning in which an agent learns to make decisions by interacting with an environment. The goal is to maximize the total reward by acting in the environment and observing the consequences.

Basic Ideas of Reinforcement Learning

Reinforcement Learning relies on a few key concepts: agents, environment, states, actions, and rewards. The agent makes decisions in an environment; each action changes the environment's state and earns a reward. Over time, the agent learns to choose actions that maximize its total reward.
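The agent, environment, state, action, and reward loop can be sketched with tabular Q-learning, one common reinforcement learning algorithm, on a hypothetical five-cell corridor where reaching the last cell earns a reward of 1. The learning rate `alpha`, discount `gamma`, exploration rate `epsilon`, and episode count are illustrative choices, not recommended settings.

```python
import random

N_STATES = 5              # corridor cells 0..4; cell 4 is the goal
MOVES = [-1, +1]          # action 0 = step left, action 1 = step right
alpha, gamma, epsilon = 0.5, 0.9, 0.2

q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each (state, action)
rng = random.Random(0)

def greedy(state):
    return max(range(2), key=lambda a: q[state][a])

for episode in range(300):
    state = 0
    while state != N_STATES - 1:
        # Epsilon-greedy: mostly exploit the best known action, sometimes explore
        action = rng.randrange(2) if rng.random() < epsilon else greedy(state)
        next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
        reward = 1.0 if next_state == N_STATES - 1 else 0.0
        # Q-learning update toward reward + discounted value of the best next action
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state

policy = [greedy(s) for s in range(N_STATES - 1)]
print(policy)  # 1 means "step right"
```

The discount `gamma` makes rewards that arrive sooner worth more, which is why the learned policy heads straight for the goal.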

Big Data

Big Data is among the 30 AI terms that everyone needs to know. It refers to the large sets of structured and unstructured information that are produced continuously. This data can be so large and complex that conventional data-handling methods cannot capture and analyze it.

Characteristics of Big Data

Big Data is often described by the three Vs: Volume, Velocity, and Variety. Volume refers to the quantity of data, velocity to the speed at which data is created and processed, and variety to the different types and formats of data. Understanding these characteristics helps explain why Big Data is relevant to AI.

Predictive Analytics

Predictive analytics is another of the Top 30 Artificial intelligence terms everyone should know. It forecasts future outcomes by combining historical data with machine learning and statistical models. The technique is especially important for organizations that work with big data.

Importance in AI

Forecasting plays a critical role in artificial intelligence. It enables organizations to predict trends, customer behavior, and even risks from their data. This term is on the list of 30 AI terms every layperson should know because it improves operational efficiency and planning.
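A minimal sketch of predictive analytics, assuming the simplest statistical model: fit a straight-line trend to past values with ordinary least squares, then extend it one step ahead. The sales figures are hypothetical, and real predictive analytics would typically use richer models and validate them on held-out data.

```python
def fit_trend(history):
    """Ordinary least-squares line through (t, value) for t = 0, 1, 2, ..."""
    n = len(history)
    ts = range(n)
    mean_t = sum(ts) / n
    mean_y = sum(history) / n
    slope = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, history)) / sum(
        (t - mean_t) ** 2 for t in ts
    )
    intercept = mean_y - slope * mean_t
    return slope, intercept

sales = [100, 110, 120, 130]                 # hypothetical monthly sales
slope, intercept = fit_trend(sales)
forecast = intercept + slope * len(sales)    # predict the next month
print(forecast)  # 140.0
```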

Model

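The list of Top 30 Artificial intelligence terms everyone should know consists of key ideas that lie at the core of the AI field, and the model is one of them. In AI, a model is what results from training an algorithm on data: a learned representation, such as a set of parameters, that maps new inputs to predictions or decisions. The concepts below help explain the field of artificial intelligence and its rapid advances.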

Algorithm

An algorithm is a procedure that a computer follows to accomplish a specific task or solve a particular problem. In AI, algorithms are used to analyze data and reach solutions or decisions.

Machine Learning

Machine learning is a subset of AI in which models are trained on large amounts of data to identify patterns that they then use for prediction.

Feature Engineering

The list of Top 30 Artificial intelligence terms everyone should know includes many basic ideas that may seem minor but play a very important role in machine learning and AI. One of these is Feature Engineering: transforming the input data, or features, so that they are more directly relevant to the problem a predictive model is solving, which increases the model's accuracy.
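As a small illustration, the sketch below derives features from a hypothetical raw order record: it combines two columns into an order total and extracts the day of the week and a weekend flag from a date. The field names (`order_date`, `quantity`, `unit_price`) are assumptions made up for this example.

```python
from datetime import date

def engineer_features(order):
    """Turn a raw order record into features a model can use more directly."""
    d = order["order_date"]
    return {
        "total": order["quantity"] * order["unit_price"],  # combine two raw columns
        "day_of_week": d.weekday(),                         # 0 = Monday ... 6 = Sunday
        "is_weekend": d.weekday() >= 5,                     # flag derived from the date
    }

raw = {"order_date": date(2024, 3, 9), "quantity": 3, "unit_price": 2.5}
print(engineer_features(raw))  # {'total': 7.5, 'day_of_week': 5, 'is_weekend': True}
```

A raw date is hard for many models to use, whereas "is it a weekend?" may relate directly to the buying behavior being predicted.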

Gradient Descent

Gradient Descent, on the list of Top 30 Artificial intelligence terms everyone should know, is a widely used optimization algorithm in real-world artificial intelligence. It minimizes a model's cost function by repeatedly updating the model's parameters, moving them toward their optimal values.

How Gradient Descent Works

The core idea of gradient descent is to repeatedly take steps proportional to the negative of the function's gradient at the current point. The process continues until the function stops decreasing meaningfully, which is called convergence; the result is a minimum, although in general it may be a local rather than a global one. The gradient gives the direction and steepness of the function's steepest ascent, so ‘descent’ means moving in the opposite direction, down the steepest slope, to minimize the cost function.
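The update rule can be sketched in a few lines for a one-parameter cost function f(w) = (w - 3)^2, whose gradient is 2(w - 3) and whose minimum is at w = 3. The learning rate, tolerance, and stopping rule (stop once a step becomes tiny) are illustrative assumptions.

```python
def gradient_descent(grad, start, learning_rate=0.1, tolerance=1e-8, max_steps=10_000):
    """Step against the gradient until the update becomes negligibly small."""
    x = start
    for _ in range(max_steps):
        step = learning_rate * grad(x)
        x -= step                      # move opposite to the direction of steepest ascent
        if abs(step) < tolerance:      # treat a tiny step as convergence
            break
    return x

# Cost f(w) = (w - 3)^2 has gradient f'(w) = 2(w - 3) and its minimum at w = 3
w_min = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
print(round(w_min, 4))  # 3.0
```

With this learning rate each step removes 20% of the remaining distance to the minimum; a learning rate that is too large would overshoot and could diverge.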

Confusion Matrix

The Top 30 Artificial intelligence terms everyone should know also include basic evaluation tools such as the Confusion Matrix, a powerful and frequently used technique for assessing classification models in machine learning.

Understanding the Confusion Matrix

A Confusion Matrix is a table that details how well a classification model performs. For a binary classifier, it crosses the predicted classes with the actual classes, giving four combinations: True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN).
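The four counts can be computed directly from lists of actual and predicted labels. This sketch assumes binary labels encoded as 1 (positive) and 0 (negative); the example labels are made up.

```python
def confusion_matrix(actual, predicted):
    """Count TP, FP, TN, FN for binary labels where 1 = positive and 0 = negative."""
    pairs = list(zip(actual, predicted))
    return {
        "TP": sum(1 for a, p in pairs if a == 1 and p == 1),  # correctly flagged positives
        "FP": sum(1 for a, p in pairs if a == 0 and p == 1),  # negatives wrongly flagged
        "TN": sum(1 for a, p in pairs if a == 0 and p == 0),  # correctly rejected negatives
        "FN": sum(1 for a, p in pairs if a == 1 and p == 0),  # positives the model missed
    }

actual    = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0, 1, 0]
print(confusion_matrix(actual, predicted))  # {'TP': 3, 'FP': 1, 'TN': 3, 'FN': 1}
```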

Precision and Recall

Among the Top 30 Artificial intelligence terms everyone should know, the precision and recall metrics deserve a mention, because they are used to assess classification models. Precision indicates how reliable the model's positive predictions are, and recall indicates how thoroughly it finds the actual positives.

Understanding Precision

Precision is the proportion of the model's positive predictions that are correct, TP / (TP + FP). In other words, it measures the accuracy of the positive predictions. High precision means few false positives, which makes it the metric to favor in applications where false positives are expensive or time-consuming.

F1 Score

The F1 Score is another of the Top 30 Artificial intelligence terms everyone should know, as a metric for evaluating the performance of machine learning models. It is especially valuable when both precision and recall matter, for example in diagnosing diseases or detecting fraud.

Understanding Precision and Recall

Precision and recall themselves also belong among the Top 30 Artificial intelligence terms everyone should know. Precision measures the percentage of the model's positive predictions that are correct, while recall, TP / (TP + FN), measures the percentage of actual positives in the data set that the model correctly identifies. The F1 Score reconciles the two by taking their harmonic mean, 2 × precision × recall / (precision + recall), which gives a single figure that is high only when both precision and recall are high.
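Given confusion-matrix counts, precision, recall, and F1 can be computed directly. The counts in the usage line are hypothetical, and returning 0.0 when a denominator is zero is one common convention, not the only one.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and their harmonic mean (F1) from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of positive predictions that were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.8 0.667 0.727
```

Because F1 is a harmonic mean, it is pulled toward the smaller of the two values: here the weaker recall (0.667) keeps F1 below the simple average of 0.733.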

ROC Curve

The ROC (Receiver Operating Characteristic) curve is a must-know concept in AI and a natural entry on the list of Top 30 Artificial intelligence terms everyone should know. It is the plot of a binary classifier's sensitivity (true positive rate) against its false positive rate.

Importance of ROC Curve

The ROC curve is an important concept in machine learning and artificial intelligence. It shows how a classification model performs across all classification thresholds, which puts it among the 30 crucial Artificial intelligence terms everyone should know. The curve plots two parameters: the True Positive Rate (TPR) and the False Positive Rate (FPR).
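The sketch below computes the points of an ROC curve by sweeping the decision threshold over a classifier's scores and recording (FPR, TPR) at each threshold. The labels and scores are invented, and treating `score >= threshold` as a positive prediction is an assumption about how the classifier is thresholded.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) pairs, sweeping the threshold from strict to lenient."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]                    # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        # Predict positive whenever the score reaches the threshold
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(roc_points(labels, scores))
```

A perfect classifier's curve would jump straight to (0, 1); a random one would follow the diagonal from (0, 0) to (1, 1).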

Neural Network Layers: Input Layer, Hidden Layer and Output Layer

Neural network layers need a proper explanation when exploring the Top 30 Artificial intelligence terms everyone should know. Neural networks are the backbone of many AI systems and are structured in three primary kinds of layer: the input layer, hidden layers, and the output layer.

Input Layer

The input layer is the layer of the neural network that receives the input data. Each neuron in this layer corresponds to one feature of the dataset. In image recognition, for example, each pixel can be fed to its own input neuron. This layer is important because it provides the basis for the processing that happens in the hidden layers.

Activation Function

In machine learning and neural networks, the activation function is one of the 30 AI terms everyone should know about. An activation function determines the output each neuron passes on, and it is central to the network's ability to learn complex patterns in the data.

Purpose of Activation Functions

The purpose of activation functions is to introduce non-linearity into the model so that it can learn the more complex features in the data. Without them, a stack of layers collapses into a single linear transformation, so the whole network would behave like a linear model, which is highly limiting.
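This collapse can be checked numerically. In the sketch below, with made-up one-dimensional weights, two stacked linear layers give exactly the same outputs as one linear layer, while inserting a ReLU activation (one common choice) between them produces a function that is no longer a single straight line.

```python
def relu(z):
    return max(0.0, z)

# Hypothetical weights for two stacked one-dimensional layers
w1, b1, w2, b2 = 2.0, 1.0, -3.0, 0.5

def two_linear_layers(x):
    return w2 * (w1 * x + b1) + b2

def one_linear_layer(x):
    # The same function as a single layer: weight w2*w1, bias w2*b1 + b2
    return (w2 * w1) * x + (w2 * b1 + b2)

def with_relu(x):
    return w2 * relu(w1 * x + b1) + b2   # the activation breaks the collapse

print([two_linear_layers(x) == one_linear_layer(x) for x in (-2.0, 0.0, 3.0)])  # [True, True, True]
print([with_relu(x) for x in (-2.0, 0.0, 3.0)])  # [0.5, -2.5, -20.5]
```

With the ReLU, the output stays flat at 0.5 for inputs below -0.5 and only then starts to fall, a bend that no single linear layer can reproduce.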

Epochs and Batch Size

Two critical entries in the list of the Top 30 Artificial intelligence terms everyone should know are “Epochs” and “Batch Size”. These basic terms matter to anyone who wants to contribute to artificial intelligence and machine learning. Batch size is the number of training examples the model processes before each update of its weights.

Epochs

Epochs are central to training neural networks. In deep learning, an epoch is one complete pass over the entire training dataset. During each epoch, the model is trained and its weights are adjusted to improve its predictions, so an epoch can be thought of as one round of learning from the data. Training usually runs for several epochs to increase the model's accuracy. The number of epochs needed depends on factors such as the complexity of the model and the size of the dataset.
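A minimal sketch of how epochs and batch size fit together, assuming a one-feature linear model trained with mini-batch gradient descent on mean squared error. The data (generated from y = 2x + 1), learning rate, epoch count, and batch size are illustrative values.

```python
import random

# Hypothetical training data generated from y = 2x + 1
data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]]

w, b = 0.0, 0.0
learning_rate = 0.05
epochs = 200        # number of full passes over the training data
batch_size = 4      # examples used for each weight update
rng = random.Random(42)

for epoch in range(epochs):
    rng.shuffle(data)                       # new example order each epoch
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        # Mean-squared-error gradients, averaged over the batch
        grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
        grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

print(round(w, 2), round(b, 2))  # close to 2.0 and 1.0
```

With 8 examples and a batch size of 4, each epoch performs 2 weight updates, so 200 epochs amount to 400 updates in total.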

Transfer Learning

Transfer Learning is one of the most valuable notions for understanding the AI field, and a natural member of the Top 30 Artificial intelligence terms everyone should know. It reuses what a model learned while training on one task as the starting point for a new, similar task. This makes training faster, because much of the data and computation that training from scratch would require can be skipped.

Understanding Transfer Learning

Among the widely known Artificial Intelligence terms, Transfer Learning stands out for its effectiveness. Unlike learning from scratch, Transfer Learning makes use of knowledge that was acquired on another task.
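Here is a toy sketch of the idea, under clearly stated assumptions: the "pretrained" feature extractor below is a hypothetical fixed weight matrix standing in for a network trained on an earlier task. Its weights stay frozen, and only a small new output layer (the "head") is trained on the new task's made-up data.

```python
import math

# HYPOTHETICAL "pretrained" layer: imagine these weights were learned on an
# earlier task. They stay frozen and are never updated below.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Reuse the frozen layer: ReLU of weighted sums of the raw inputs."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# New task's labeled data (made up). Only the new output head is trained.
new_task = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([3.0, 1.0], 1), ([1.0, 3.0], 0)]
head_w, head_b, lr = [0.0, 0.0], 0.0, 0.5

def head(features):
    return sigmoid(sum(w * f for w, f in zip(head_w, features)) + head_b)

for _ in range(500):
    for x, target in new_task:
        feats = extract_features(x)             # reused, frozen features
        error = head(feats) - target            # cross-entropy gradient w.r.t. the head's logit
        head_w = [w - lr * error * f for w, f in zip(head_w, feats)]
        head_b -= lr * error

print([round(head(extract_features(x))) for x, _ in new_task])  # [1, 0, 1, 0]
```

Only three numbers (`head_w` and `head_b`) are learned here; in practice the frozen part would be a large pretrained network, which is where the savings in data and computation come from.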

AI Ethics and Bias

The Top 30 Artificial intelligence terms everyone should know are often associated with hot topics such as AI ethics and bias. These concepts are important to grasp because they shape how AI systems are created and used.

Importance of AI Ethics

AI ethics provides a framework of principles for creating and deploying artificial intelligence solutions. It aims to ensure that AI systems do not discriminate and that they uphold general values such as fairness, transparency, and accountability. By focusing on ethics, we can manage or eliminate possible harms that may come with the use of AI.

The Future of AI

The Top 30 AI terms that everyone should know give readers a starting point as they find their way through the AI jungle. Anyone who wants to learn about the progress and implications of AI technologies needs to understand these terms.

Machine Learning

Machine Learning is a subcategory of Artificial Intelligence: the ability of a system to learn from previous experience without being explicitly programmed.

Neural Networks

Neural Networks are computational models inspired by biological neurons, and they are fundamental to deep learning tasks.

Deep Learning

Deep Learning uses neural networks with numerous layers, each of which performs another stage of processing on the data it receives.

Natural Language Processing

Natural Language Processing (NLP) is the ability of systems to process human language and respond to it appropriately.

Computer Vision

Computer Vision is the ability of an application or device to look at an image and make decisions based on what it sees.

Artificial General Intelligence

Artificial general intelligence (AGI) refers to hypothetical machines that can learn and reason effectively across a wide range of tasks, rather than within a single narrow domain.